Why Artificial Intelligence Is a Gateway Drug to Nuclear Energy

New research explains why tech giants have scrapped their 100% renewables targets and recently embraced nuclear energy.

As artificial intelligence (AI) models and data centers proliferate, the energy needs behind these computational giants have become a pivotal concern for tech companies. Nvidia, one of the biggest players in AI hardware, has recently recognized nuclear energy as a promising zero-emission solution for meeting the booming power needs of AI operations.

Recent research from NTNU (the Norwegian University of Science and Technology) examines how AI's energy consumption patterns align better with baseload power sources such as nuclear energy. Unlike solar and wind, nuclear power offers a stable, continuous energy supply on its own, which is something mission-critical AI data centers need.

AI infrastructure requires immense capital expenditure: leading AI servers can cost millions of dollars per unit. Every hour that hardware sits idle is an hour in which it earns nothing against that investment, so any disruption in power supply translates into significant revenue loss. As a result, the stability of the power supply becomes a far greater priority than its cost.

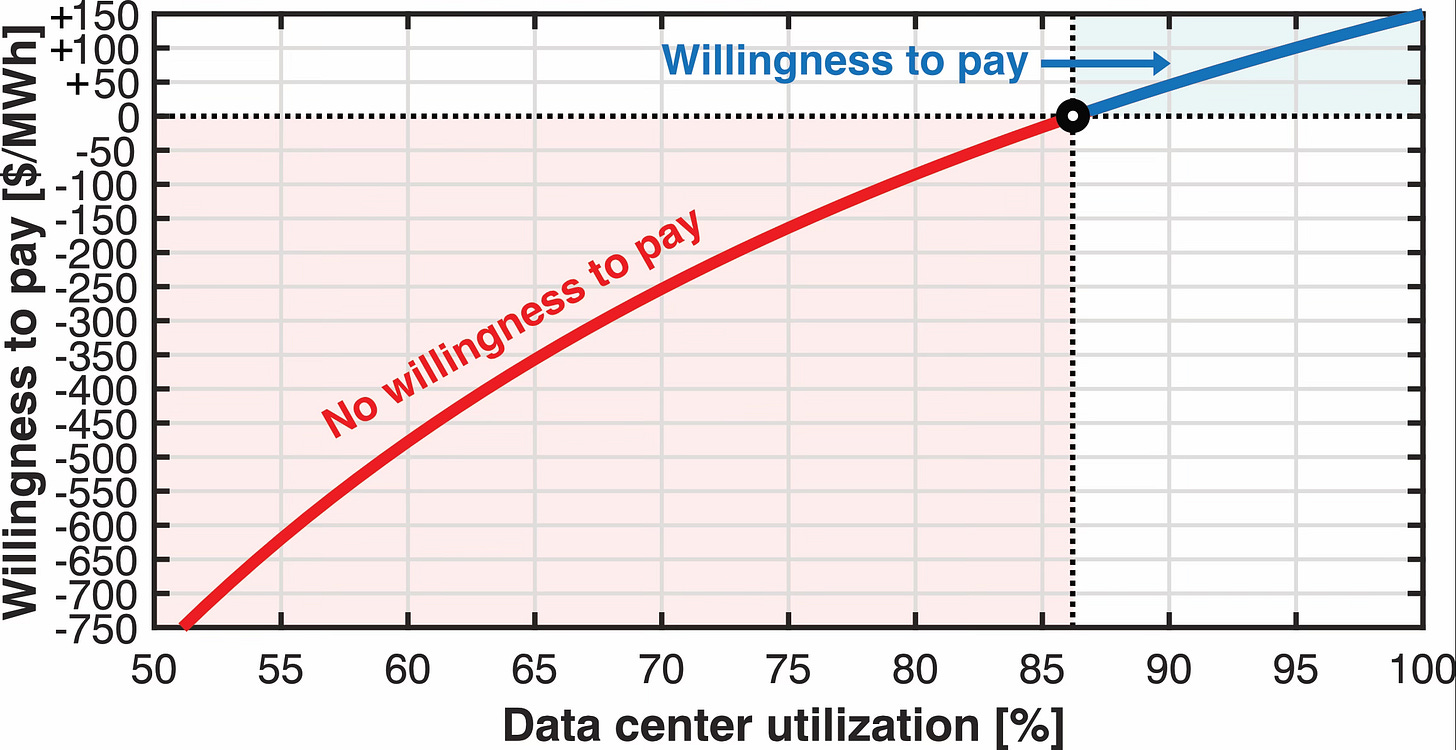

As shown in Figure 1, AI data centers are willing to pay premium prices for electricity when their utilization (the so-called load factor) is at or near 100%, as this maximizes their profitability. However, once utilization drops below 86%, their willingness to pay falls rapidly to zero, which shows how critical uninterrupted power is for these operations.
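To make the shape of that curve concrete, here is a toy break-even sketch. It is not the model from the NTNU research. The function name, the revenue figure, and the fixed-cost figure are all hypothetical, and the fixed cost is chosen so that willingness to pay reaches zero at a load factor of 86%, which is only one reading of the threshold above. The idea is that fixed costs are spread over fewer productive hours as utilization falls, so the margin left over to pay for each megawatt-hour shrinks quickly.

```python
HOURS_PER_YEAR = 8760

def willingness_to_pay(load_factor,
                       revenue_per_mwh=1000.0,            # hypothetical compute revenue per MWh consumed
                       fixed_cost_per_mw_year=7_533_600.0):  # hypothetical; set so break-even is at 86%
    """Highest electricity price ($/MWh) at which 1 MW of capacity still breaks even.

    Toy model: revenue scales with energy consumed, while capital and other
    fixed costs are owed regardless of how much the hardware actually runs.
    """
    if not 0.0 < load_factor <= 1.0:
        raise ValueError("load_factor must be in (0, 1]")
    energy_mwh = load_factor * HOURS_PER_YEAR        # energy consumed per year
    margin = revenue_per_mwh * energy_mwh - fixed_cost_per_mw_year
    return max(0.0, margin / energy_mwh)             # never willing to pay a negative price

if __name__ == "__main__":
    for lf in (1.00, 0.95, 0.90, 0.86, 0.80):
        print(f"load factor {lf:.0%}: up to ${willingness_to_pay(lf):.2f}/MWh")
```

Under these made-up numbers, the affordable price falls from about $140/MWh at full utilization to roughly $44/MWh at 90%, and to zero at 86% and below. The absolute values mean nothing; the point is how steeply the curve drops over a narrow range of load factors.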

The conclusion is clear: AI data centers prioritize stability and uptime over a cheap but fluctuating power supply. Nuclear energy, with its consistent output, aligns perfectly with the needs of AI data centers — offering a solution that intermittent renewable energy sources, such as solar and wind, struggle to provide on their own.